What is augmented reality (AR) and how does it work?

August 21, 2018

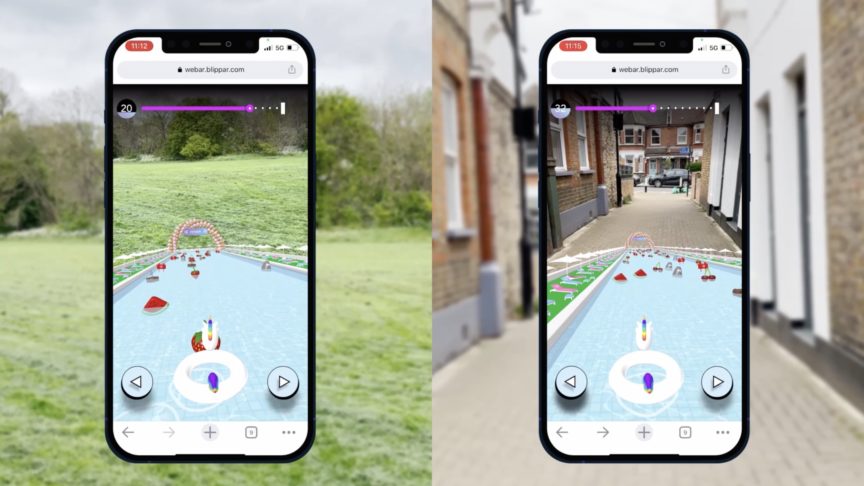

Augmented reality (AR) adds digital content onto a live camera feed, making that digital content look as if it is part of the physical world around you.¹

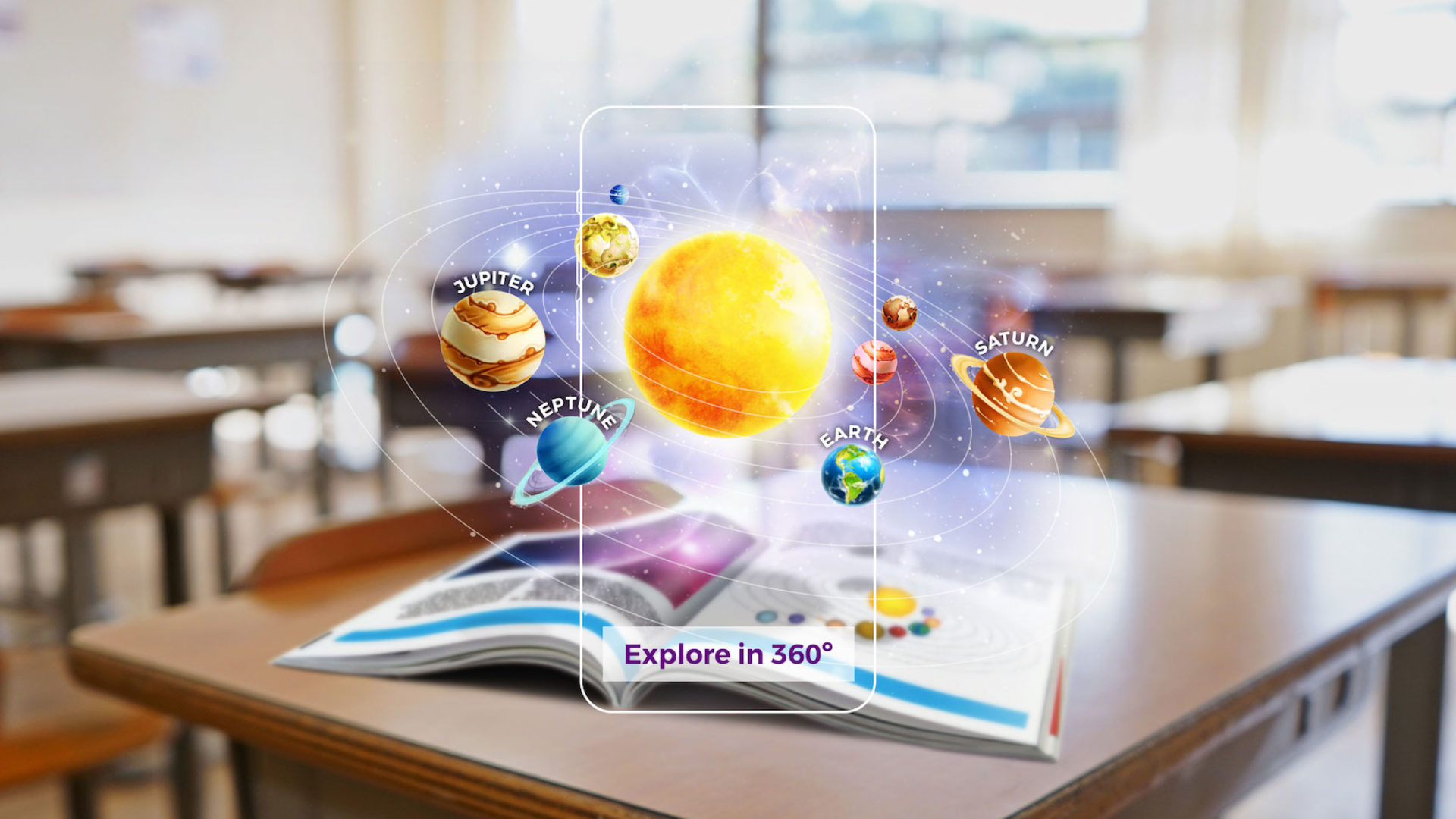

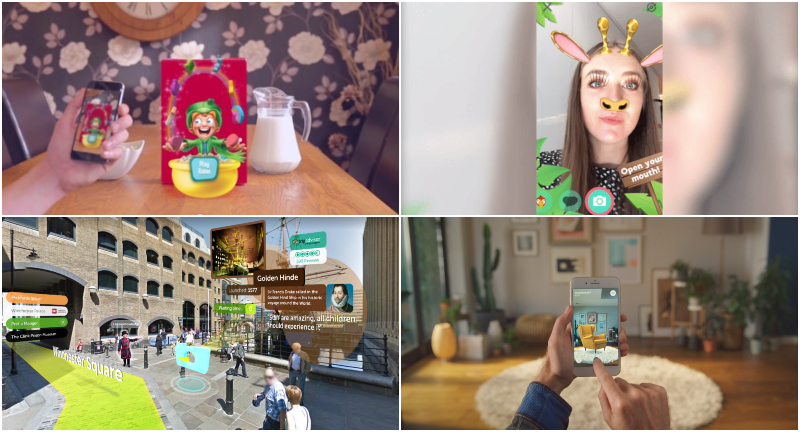

In practice, this could be anything from making your face look like a giraffe to overlaying digital directions onto the physical streets around you. Augmented reality can let you see how furniture would look in your living room, or play a digital board game on a cereal box. All these examples require understanding the physical world from the camera feed, i.e. the AR system must understand what is where in the world before adding relevant digital content at the right place. This is achieved using computer vision, which is what differentiates AR from VR, where users get transported into completely digital worlds. Read on to find out…

- How does augmented reality work?
- Why does AR need computer vision?
- How does AR display digital content?

How does augmented reality work?

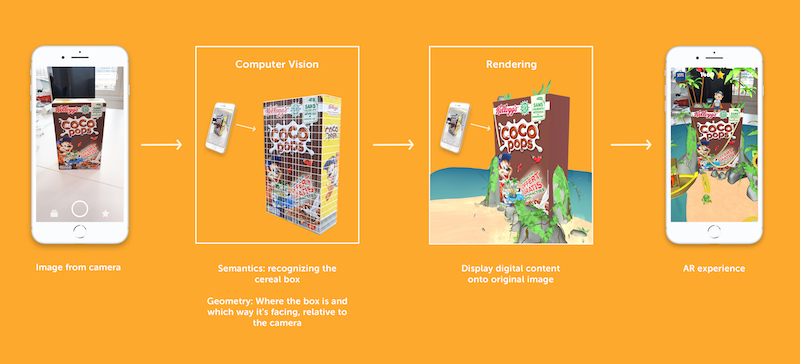

So now that you know the meaning of AR, how does it work? First, computer vision works out what is in the world around the user from the content of the camera feed. This allows the system to show digital content relevant to what the user is looking at. This digital content is then displayed in a realistic way, so that it looks like part of the real world - this is called rendering.

Before breaking this down into more detail, let’s use a concrete example to make this clearer. Consider playing an augmented reality board game using a real cereal box as the physical support, as in the figure below. First, computer vision processes the raw image from the camera and recognizes the cereal box. This triggers the game. The rendering module then augments the original frame with the AR game, making sure it precisely overlaps with the cereal box. For this it uses the 3D position and orientation of the box determined by computer vision.

Since augmented reality is live, all the above has to happen every time a new frame comes from the camera. Most modern phones work at 30 frames per second, which gives us only about 33 milliseconds per frame to do all this. In many cases the AR feed you see through the camera is delayed by roughly 50 ms to allow all this to happen, but our brain does not notice!
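The per-frame time budget follows directly from the frame rate. The short sketch below works it out; the function names are hypothetical and chosen only for illustration.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to process one frame at the given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def delay_in_frames(delay_ms: float, fps: float) -> float:
    """Express a display delay as a number of frame intervals."""
    return delay_ms / frame_budget_ms(fps)


if __name__ == "__main__":
    print(f"Budget at 30 fps: {frame_budget_ms(30):.1f} ms")
    print(f"Budget at 60 fps: {frame_budget_ms(60):.1f} ms")
    print(f"A 50 ms delay spans {delay_in_frames(50, 30):.1f} frames at 30 fps")
```

At 30 frames per second the budget is 1000 / 30, or about 33.3 ms, so a 50 ms display delay corresponds to one and a half frame intervals.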

Why does AR need computer vision?

While our brain is extremely good at understanding images, this remains a very difficult problem for computers. There is a whole branch of Computer Science dedicated to it, called computer vision. Augmented reality requires understanding the world around the user in terms of both semantics and 3D geometry. Semantics answers the “what?” question, for example recognizing the cereal box, or that there is a face in the image. Geometry answers the “where?” question: it infers where the cereal box or the face is in the 3D world, and which way it is facing. Without geometry, AR content cannot be displayed at the right place and angle, which is essential to make it feel part of the physical world. Often, we need to develop new techniques for each domain. For example, computer vision methods that work for a cereal box are quite different from those used for a face.

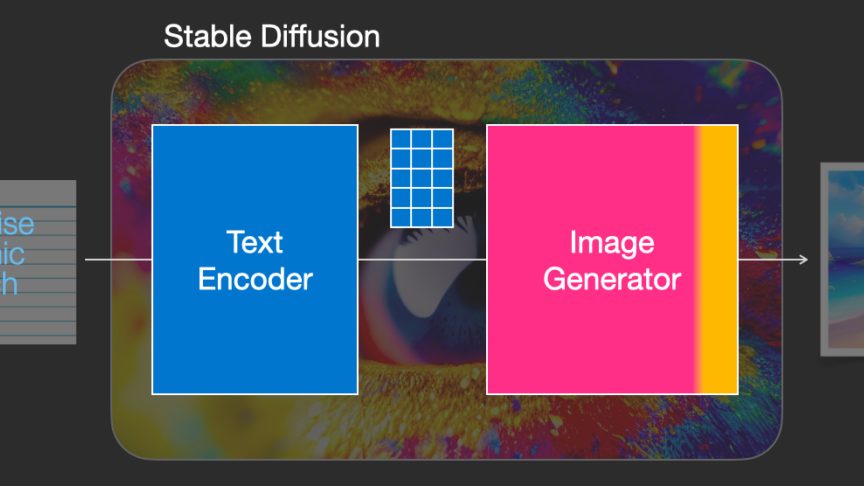

Semantics and geometry of the world.

Traditionally, the computer vision techniques used for understanding these two aspects are quite different. On the semantics side we have seen much progress thanks to Deep Learning, which typically figures out what is in an image without worrying about its 3D geometry. On its own, semantic understanding enables basic forms of AR. For example, whenever computer vision recognizes an object we could display relevant information floating on the screen, but it will not look anchored to the physical object. Anchoring it requires the geometric side of computer vision, which builds on concepts from projective geometry². In the cereal box example, we need to know the box’s position and orientation with respect to the camera to anchor the AR game correctly when we display it.
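To make the geometric side concrete, here is a minimal sketch of the pinhole camera model, a standard starting point in projective geometry. Given the position and orientation of the cereal box relative to the camera, it computes where a corner of the box lands in the image. The focal length, image centre and box size are made-up illustrative values, the function names are hypothetical, and the rotation is restricted to a single axis for brevity. A real AR system would have to estimate the pose from the image rather than be handed it.

```python
import math


def rotate_y(point, yaw_rad):
    """Rotate a 3D point about the vertical (y) axis."""
    x, y, z = point
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return (c * x + s * z, y, -s * x + c * z)


def project(point_obj, yaw_rad, translation, focal_px, cx, cy):
    """Map a point on the object to pixel coordinates.

    The point is first moved into the camera frame (rotate, then translate),
    then projected with the pinhole model: u = f * X / Z + cx, v = f * Y / Z + cy.
    """
    xr, yr, zr = rotate_y(point_obj, yaw_rad)
    tx, ty, tz = translation
    xc, yc, zc = xr + tx, yr + ty, zr + tz
    if zc <= 0:
        raise ValueError("point is behind the camera")
    return (focal_px * xc / zc + cx, focal_px * yc / zc + cy)


if __name__ == "__main__":
    # Assumed values: a 0.2 m x 0.3 m box face, 1 m in front of the camera,
    # focal length 800 px, image centre at (320, 240).
    corners = [(-0.1, -0.15, 0.0), (0.1, -0.15, 0.0),
               (0.1, 0.15, 0.0), (-0.1, 0.15, 0.0)]
    for yaw_deg in (0, 30):
        pixels = [project(c, math.radians(yaw_deg), (0.0, 0.0, 1.0), 800, 320, 240)
                  for c in corners]
        print(yaw_deg, [(round(u, 1), round(v, 1)) for u, v in pixels])
```

Turning the box changes where its corners appear on screen, which is why the rendering step needs the pose to keep the game overlapping the box.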

How does AR display digital content?

For each augmented reality experience we need to define some logic beforehand. This specifies which digital content to trigger when something is recognized. In the live AR system, upon recognition the rendering module renders the relevant content onto the camera feed. This is the last step in the AR pipeline. Making this fast and realistic is very challenging, particularly for wearable displays like glasses (another very active area of research).

Another way to explain how AR works is to consider computer vision as inverse rendering. Intuitively, computer vision recognizes and understands the 3D world from a 2D image (there is a face, and this is where it is in the 3D world), so that we can add digital content (a 3D giraffe mask anchored to the face) that is then rendered onto the 2D phone screen.
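The logic defined beforehand can be as simple as a lookup from what was recognized to what should be shown. The sketch below is an illustration only: the `Detection` and `RenderCommand` types, the `EXPERIENCES` table and the labels in it are all hypothetical, and a real renderer would then draw each command onto the frame.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Detection:
    label: str                            # semantics: what was recognized
    position: Tuple[float, float, float]  # geometry: where it is, in metres
    yaw_deg: float                        # geometry: which way it is facing


@dataclass
class RenderCommand:
    content: str                          # digital content to draw
    position: Tuple[float, float, float]  # where to anchor it
    yaw_deg: float                        # how to orient it


# Hypothetical logic defined beforehand: recognized label -> digital content.
EXPERIENCES: Dict[str, str] = {
    "cereal_box": "board_game",
    "face": "giraffe_mask",
}


def plan_frame(detections: List[Detection]) -> List[RenderCommand]:
    """Turn this frame's detections into commands for the rendering module."""
    commands = []
    for det in detections:
        content = EXPERIENCES.get(det.label)
        if content is None:
            continue  # nothing defined for this object, so show nothing
        # Reuse the detected pose so the content looks anchored to the object.
        commands.append(RenderCommand(content, det.position, det.yaw_deg))
    return commands


if __name__ == "__main__":
    frame = [Detection("face", (0.0, 0.0, 0.5), 10.0),
             Detection("dog", (1.0, 0.0, 2.0), 0.0)]
    print(plan_frame(frame))
```

Keeping the trigger table separate from the recognition and rendering code means a new experience can be added by editing the table alone.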

AR is a very active field, and in the future we expect to see many exciting new developments. As computer vision gets better at understanding the world around us, AR experiences will become more immersive and exciting. Moreover, augmented reality today lives mostly on smartphones, but it can happen on any device with a camera. When enough computational power is available on AR glasses, we expect this medium to make AR mainstream - enhancing the way we live, work, shop and play.

Ready to create augmented reality? Try our drag-and-drop AR creator tool, Blippbuilder -- no coding skills required -- or our developer tool, Blippbuilder Script. Drop us a line if you have any questions.

1 There are other forms of augmented reality involving different senses, e.g. haptic or auditory, but we focus here on the mainstream application of AR: visual.

2 We are starting to see Deep Learning being used also on the geometric side, and we expect to see more and more in the future.