AlphaGo – A Step in the Right Direction for Artificial Intelligence

March 14, 2016

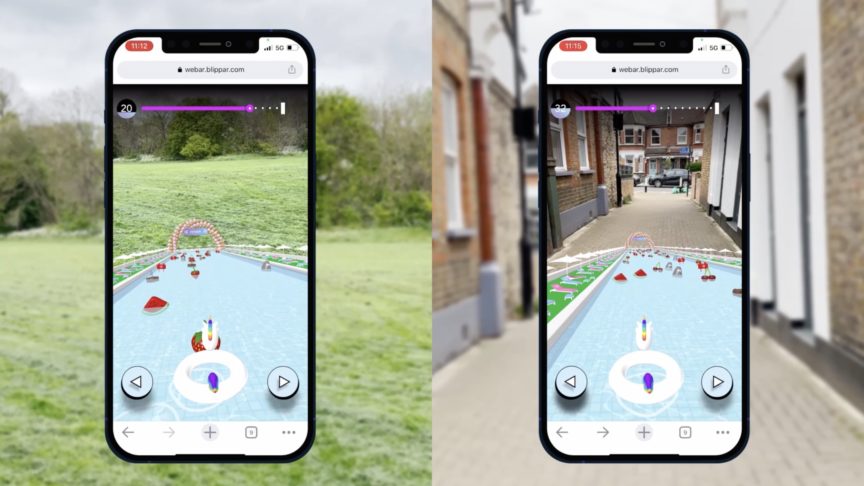

Written by Alykhan Tejani, Lead Research Engineer at Blippar.

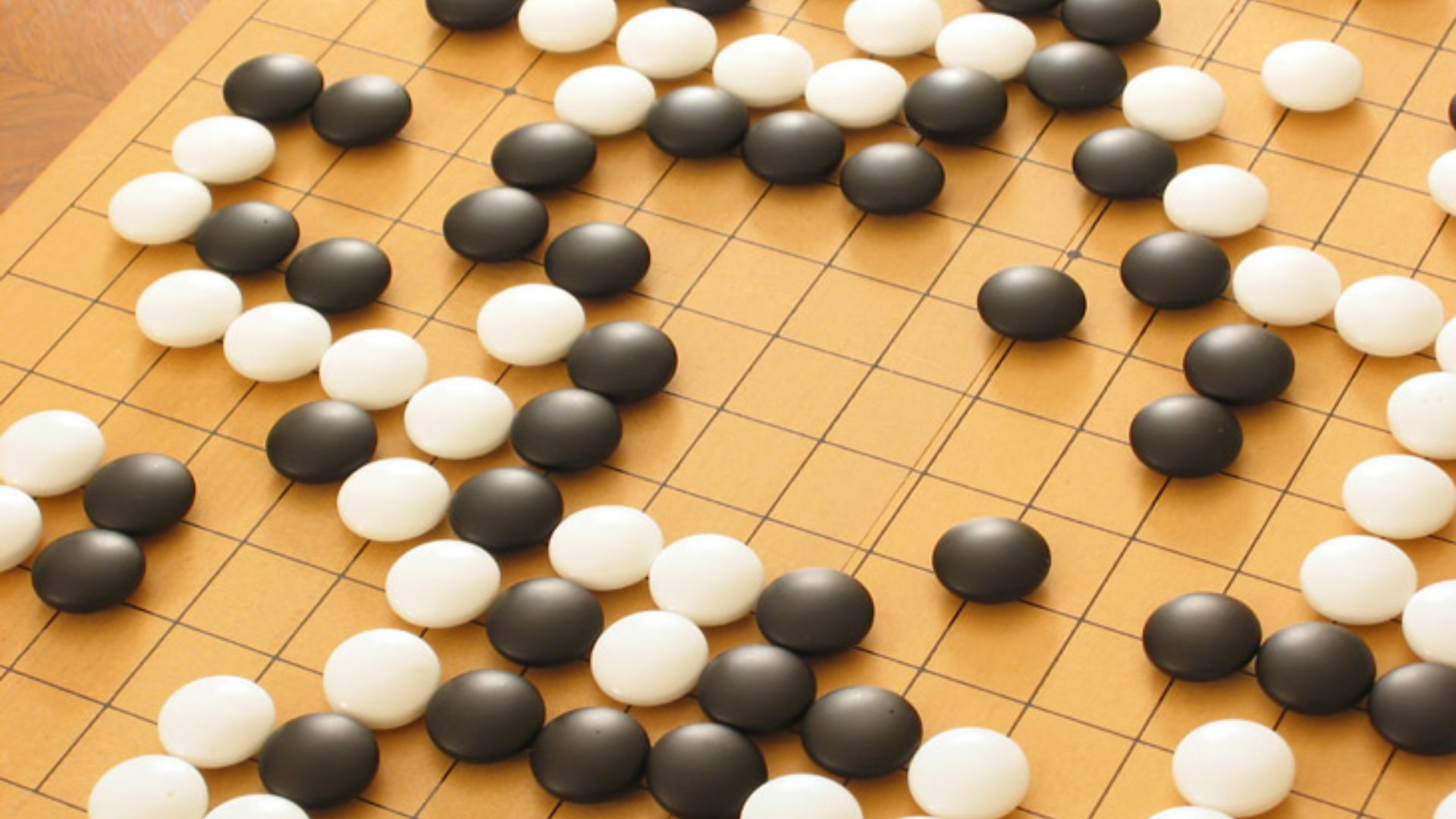

May 11th, 1997, marks a significant day in the history of artificial intelligence (AI). On this day, IBM’s Deep Blue became the first computer program to beat a reigning world champion in a six-game Chess match. Almost 19 years later, on March 12th, 2016, Google DeepMind’s AlphaGo defeated Lee Sedol, one of the world’s best Go players, in a best-of-five match, a feat many researchers believed was still a decade away.

Go, like Chess, is a zero-sum, perfect-information game; it dates back 2,500 years to ancient China. Despite its relatively simple rules and straightforward objective (surround territory while avoiding being surrounded), Go is an extremely complex game. For example, the number of possible games of Go is ~10^700, far more than the number of atoms in the observable universe (~10^80), which rules out any brute-force approach. This complexity is the reason it has taken 19 years for computers to go from winning at Chess to winning at Go, and even that is a remarkable feat.
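
To get a feel for why brute force is hopeless, here is a rough back-of-the-envelope sketch in Python. It uses the two order-of-magnitude figures quoted above; the search speed and the age of the universe in seconds are assumptions chosen purely for illustration, and are deliberately generous to the brute-force side.

```python
# Order-of-magnitude figures quoted in the article.
POSSIBLE_GAMES = 10**700
ATOMS_IN_UNIVERSE = 10**80

# Assumptions for illustration only (not from the article):
# every atom is a computer checking 10^18 games per second,
# running for roughly the age of the universe (~13.8 billion years).
GAMES_PER_SECOND_PER_ATOM = 10**18
AGE_OF_UNIVERSE_SECONDS = 4 * 10**17


def order_of_magnitude(n: int) -> int:
    """Return the power of ten of a positive integer (e.g. 2500 -> 3)."""
    return len(str(n)) - 1


# Total games examined under these very generous assumptions.
games_checked = (
    ATOMS_IN_UNIVERSE * GAMES_PER_SECOND_PER_ATOM * AGE_OF_UNIVERSE_SECONDS
)

# How many such universe-lifetimes a full enumeration would take.
lifetimes_needed = POSSIBLE_GAMES // games_checked

print(f"Games checked: about 10^{order_of_magnitude(games_checked)}")
print(f"Universe-lifetimes needed: about 10^{order_of_magnitude(lifetimes_needed)}")
```

Even with every atom in the universe acting as an impossibly fast computer, the search would cover only a vanishing fraction of the games, which is why a learning-based approach was needed.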

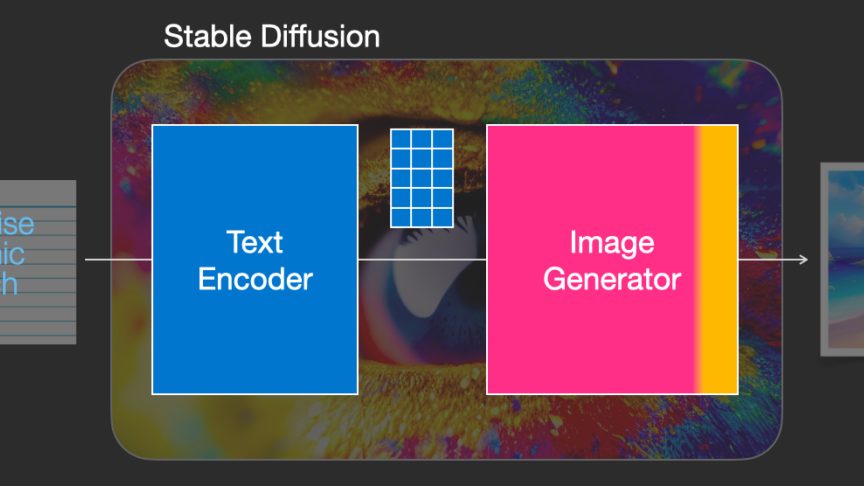

AlphaGo is based on deep neural networks, a technique that has become very popular in the machine learning community in the last few years. At a high level, AlphaGo first learned to play Go like an expert by learning from publicly available game histories of experienced players. Once it had achieved a high level of play, AlphaGo then played against itself many millions of times and learned from its own mistakes, eventually reaching superhuman levels, or at least levels high enough to convincingly beat one of the world’s best human players.

The most unusual and beautiful thing about watching AlphaGo play was that it made many moves that would be considered weird and unorthodox, yet it still came out on top. It is important to remember that AlphaGo is trained with one objective: to win the game. It is not concerned with winning convincingly. Furthermore, AlphaGo learned to achieve this objective from raw data, meaning it was never taught any commonly known strategies. This approach to learning allowed AlphaGo to develop its own strategies and insights, and is what makes watching it so fascinating and playing against it so difficult.

There is no doubt that this is a monumental achievement for the AI community as a whole, but it is important for us to remember that while AlphaGo may be a better Go player than the amazing Lee Sedol, when it comes to absolutely everything else, Lee Sedol is infinitely better than AlphaGo. These results are an amazing achievement, everybody involved should feel extremely proud, and this is definitely a huge step toward true AI. However, for all the Skynet-fearing humans out there, worry not. We still have a long, long way to go before the machines take over.