What is Computer Vision? Human Vision vs. Computer Vision

August 2, 2016

Following on from the first two posts, which looked specifically at human vision, the next two posts will compare and contrast human and computer vision. This first post looks at objectives, biases and the different ways light is received by each system. You’ll see that there are some similarities between the two systems. This may not be surprising since, in creating computer vision technology, humans haven’t had that many other visual systems to gain inspiration from!

First off, the objectives of the two systems are the same: to convert light into useful signals from which to construct accurate models of the physical world. Similarly, when considered at a high level, the structures of human and computer vision are somewhat similar: both have light sensors which convert photons into a signal, a transfer mechanism to carry the signal, and an “understanding place” where the signal is interpreted.

But when you trace the path of a light signal through a computer vision technology vs a human vision system, many differences emerge. Let’s start even before any light has reached the sensors of either system. Does either system have any inbuilt biases as regards what it “wants” to see?

The answer is yes for both, but the biases are different.

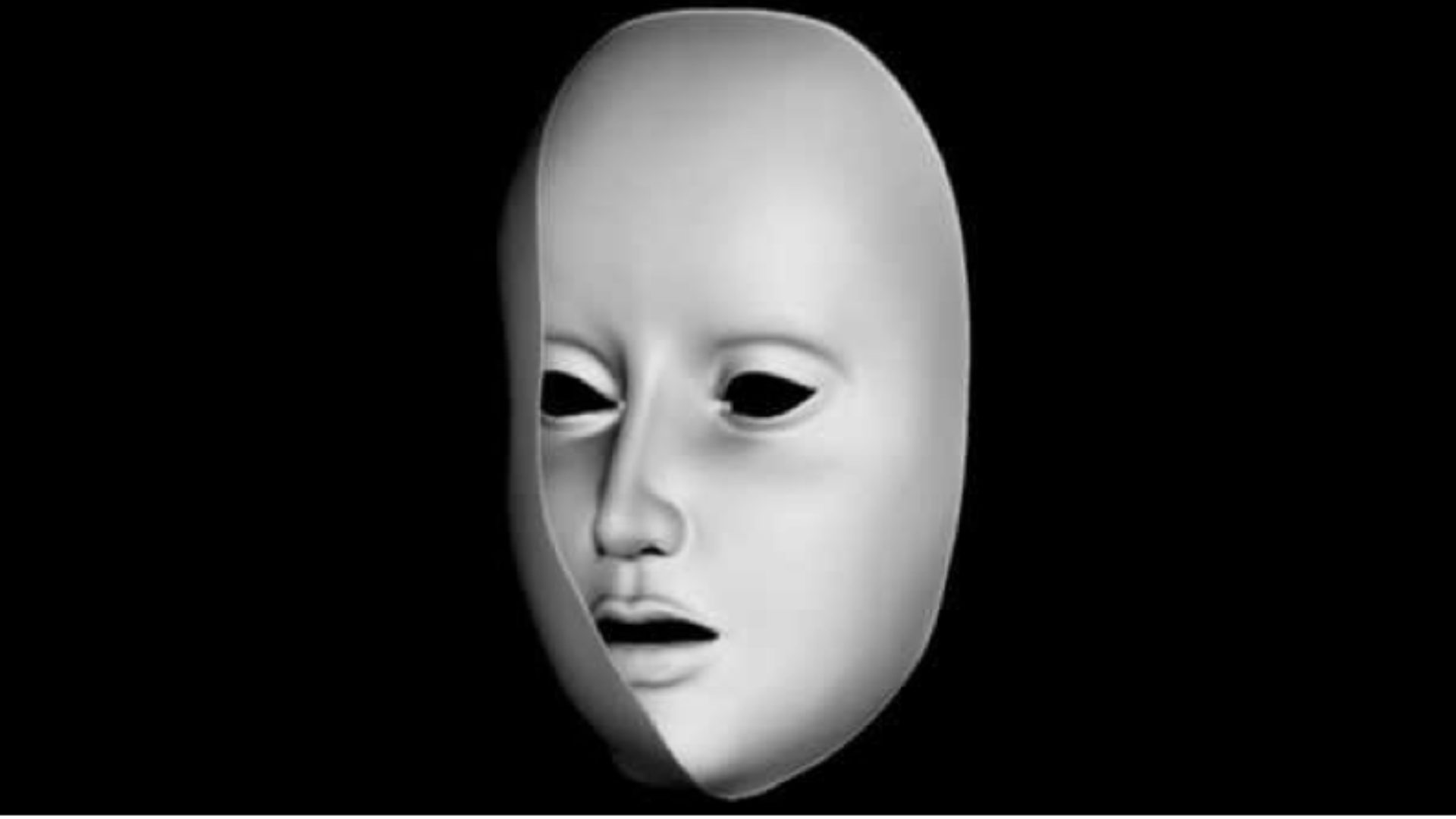

Human vision is extremely sensitive to other human faces. It sees faces when they are not really there.

And take a look at the famous rotating mask illusion. Your visual system is shown very explicitly that what you should see is a concave, rotating mask seen from the back. But your visual system won’t play ball! It says “No! I’m looking at a normal convex face seen from the front!”

Computer vision systems can be trained to see human faces, but they have nothing like the same inbuilt bias towards seeing faces that may not even be there.

But computer vision technology has its own biases and can be fooled, as this great video explains. These biases are different in nature and often revolve around over-generalizing on the basis of one specific aspect of an image. Studying these biases is inspiring new approaches to computer vision technology.

How about when we think about light arriving at the sensors? Well, another difference is in relative field of view. Human field of view is roughly 220 degrees, i.e. everything that is in front of us and a little bit more. But computer vision systems can have a 360-degree field of view, with no “front” or “back”.

And there’s another difference related to field of view. Computer vision technology is mostly uniform across all parts of the field of view. Compare this with human vision, where what we are best at seeing varies across the field of view. For instance, humans tend to see colour better at the centre of the visual field. Conversely, we can detect dim objects better at the periphery. You might have noticed this if you’ve ever seen a very faint star out of the corner of your eye but then been unable to see it when you turned to look straight at it. Likewise, the periphery of the human field of vision is especially attuned to movement, more so than the centre.

Another difference: there are two types of light sensor in the human visual system, whereas computer vision sensors don’t have this specialization. Sensor cells on the human retina break down into rods and cones.

Rods are way more numerous (outnumbering cones about 20:1) and are more sensitive to the overall light level, meaning they are the ones we use to see in low light conditions. They are also more concentrated outside the centre of the field of view, allowing us to see in the dark but delivering lower visual resolution.

Cones can see different colours and deliver higher resolution, but they don’t work in the dark and are mostly found in the centre. So while there is a similarity between computer vision technologies and human vision in that both have millions of tiny light sensors, beyond this basic point the sensors for each system are pretty different.
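For readers who like to see things in code, here is a toy sketch of that contrast: a camera-like sensor with the same relative resolution at every angle, next to a human-like one whose resolution drops away from the centre. The function names, the shape of the falloff curve and the `half_point_deg` value are all illustrative assumptions, not measurements of any real eye or camera.

```python
def uniform_acuity(eccentricity_deg: float) -> float:
    """Camera-like sensor: same relative resolution at every viewing angle."""
    return 1.0


def foveated_acuity(eccentricity_deg: float, half_point_deg: float = 2.0) -> float:
    """Toy human-like falloff in relative resolution away from the centre.

    Returns 1.0 at the centre and 0.5 at half_point_deg from it.
    half_point_deg is an assumed, illustrative value.
    """
    return 1.0 / (1.0 + abs(eccentricity_deg) / half_point_deg)


if __name__ == "__main__":
    # Compare the two toy sensors at a few angles from the centre of view.
    for angle in (0, 2, 10, 40):
        print(angle, uniform_acuity(angle), round(foveated_acuity(angle), 3))
```

Note that this only models sharpness of detail. It says nothing about the things the periphery is better at, such as low light and movement.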

Hopefully this post comparing human and computer vision has given you a sense of some of the differences and similarities between the two systems in terms of objectives, biases and sensing structures. In the next post, we’ll compare how the signal created by the sensors is transmitted and ultimately understood in human vision and computer vision.

If you'd like to learn more, please contact us via this form. We'd love to hear from you.

Please click here for part 4 of this series around Computer Vision

Note: Some newer approaches to computer vision use “attention models” in which the technology is trained to focus on particular parts of the image.