What is Computer Vision – Post 5: A Very Quick History

October 4, 2016

This is the 5th post in a series looking at computer vision for non-technical people (which is what I am BTW). You can read this post in isolation but the first four posts will provide more context. This post takes a historical look at computer vision.

The field of computer vision emerged in the 1950s.

One early breakthrough came in 1957 in the form of the “Perceptron” machine. This “giant machine thickly tangled with wires” was the invention of psychologist and computer vision pioneer Frank Rosenblatt.

Biologists had been looking into how learning emerges from the firing of neurons in the brain. Broadly, the conclusion was that learning happens when links between neurons get stronger. And the connections get stronger when the neurons fire together more often.

Rosenblatt’s insight was that the same process could be applied in computers. The Perceptron used a very early “artificial neural network” and was able to sort images into very simple categories like triangle and square. While from today’s perspective the Perceptron’s early neural networks are extremely rudimentary, they set an important foundation for subsequent research.
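For readers who would like to see the idea in code, here is a minimal sketch of a perceptron-style learner. It is an illustration only and not Rosenblatt’s actual machine: the tiny 5x5 “images”, the function names and the learning rate are all made up for this example. The program strengthens or weakens the “connection” to each pixel every time it guesses wrong.

```python
# Toy perceptron, for illustration only (not Rosenblatt's original design).
# The images, names and settings below are assumptions made for this sketch.

def to_pixels(rows):
    """Flatten a picture drawn with '#' and '.' into a list of 1s and 0s."""
    return [1 if ch == "#" else 0 for row in rows for ch in row]

def predict(weights, bias, pixels):
    """Answer 1 ("square") if the weighted sum of pixels is positive, else 0."""
    total = bias + sum(w * p for w, p in zip(weights, pixels))
    return 1 if total > 0 else 0

def train(examples, epochs=10, learning_rate=1.0):
    """Perceptron learning rule: adjust the weights only after a wrong guess."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for pixels, label in examples:
            error = label - predict(weights, bias, pixels)  # -1, 0 or +1
            if error != 0:
                weights = [w + learning_rate * error * p
                           for w, p in zip(weights, pixels)]
                bias += learning_rate * error
    return weights, bias

SQUARE = to_pixels(["#####",
                    "#...#",
                    "#...#",
                    "#...#",
                    "#####"])

TRIANGLE = to_pixels(["..#..",
                      "..#..",
                      ".#.#.",
                      ".#.#.",
                      "#####"])

# Label 1 means "square", label 0 means "triangle".
weights, bias = train([(SQUARE, 1), (TRIANGLE, 0)])
print(predict(weights, bias, SQUARE))
print(predict(weights, bias, TRIANGLE))
```

With only two training pictures this is far simpler than anything useful, but the loop of “guess, check, adjust the connections” is the same basic idea.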

Another key moment was the founding of the Artificial Intelligence Lab at MIT in 1959. One of the co-founders was Marvin Minsky, and in 1966 he gave an undergraduate student a specific assignment: “Spend the summer linking a camera to a computer. Then get the computer to describe what it sees.” Needless to say, the task has proved a little more difficult than one undergraduate can achieve over a single summer!

Minsky’s timelines were over-confident. But there was progress, for example in working out where the edges of an object within an image are. The 70s saw the first commercial applications of computer vision technology. Optical Character Recognition (OCR) makes typed, handwritten or printed text intelligible to computers. In 1978, Kurzweil Computer Products released its first product. The aim was to enable blind people to read via OCR computer programs.
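To give a flavour of the edge-finding mentioned above, here is a rough sketch of one very simple approach: look for places where brightness jumps sharply between neighbouring pixels. This is an assumption-laden toy, not the method any particular researcher used. The tiny image, the function name and the threshold value are invented for illustration.

```python
# Toy edge finder, for illustration only. The image, function name and
# threshold are assumptions made for this sketch.

def find_vertical_edges(image, threshold=50):
    """Mark a 1 wherever brightness jumps sharply between a pixel
    and its right-hand neighbour, and a 0 everywhere else."""
    edges = []
    for row in image:
        edge_row = [1 if abs(row[x + 1] - row[x]) >= threshold else 0
                    for x in range(len(row) - 1)]
        edges.append(edge_row)
    return edges

# A tiny grayscale image: dark on the left, bright on the right.
# Each number is a brightness from 0 (black) to 255 (white).
IMAGE = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 12, 198, 200],
]

edges = find_vertical_edges(IMAGE)
for row in edges:
    print(row)
```

Each output row has a single 1 in the middle, which is where the dark region meets the bright one. Small wobbles in brightness (10 to 12, say) fall under the threshold and are ignored.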

The 1980s saw expanded use of the artificial neural networks pioneered by Frank Rosenblatt in the 50s. The new neural networks were more sophisticated in that the work of interpreting an image took place over a number of “layers”.

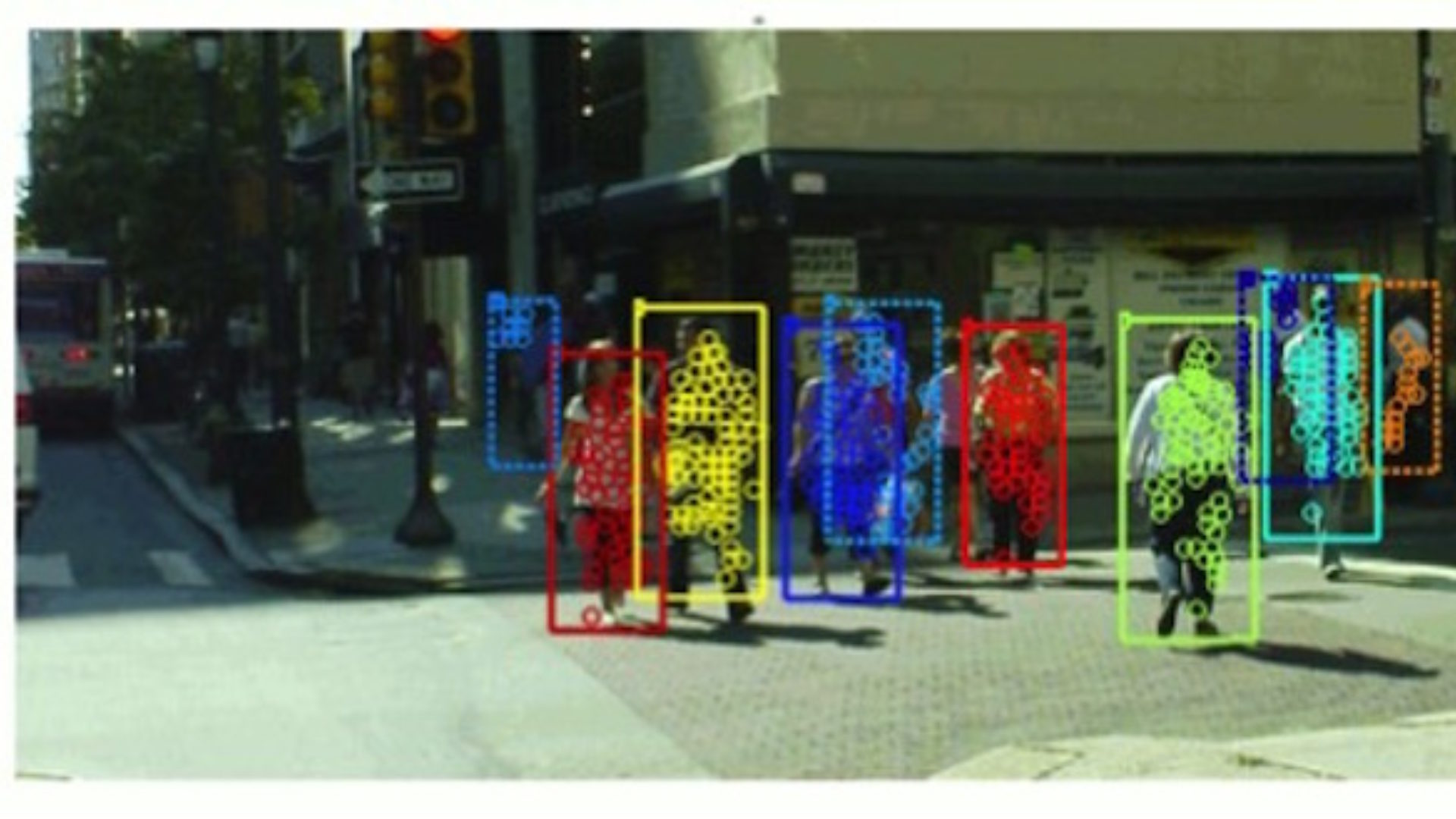

Things started to heat up in the second half of the 90s. This decade saw the first use of statistical techniques to recognize faces in images, and increasing interaction between computer graphics and computer vision. Progress accelerated further thanks to the Internet, partly because larger annotated datasets of images became available online. And in the past decade, progress in computer vision has really taken off. Two underlying reasons have driven the breakthroughs: more and better hardware, and much, much more data.

1. Hardware. There are two parts to this. Firstly, computing power simply became more abundant and cheaper. For example, when Google researchers trained an AI to identify cat faces in YouTube videos, they had 16,000 computer processors working together to do it.

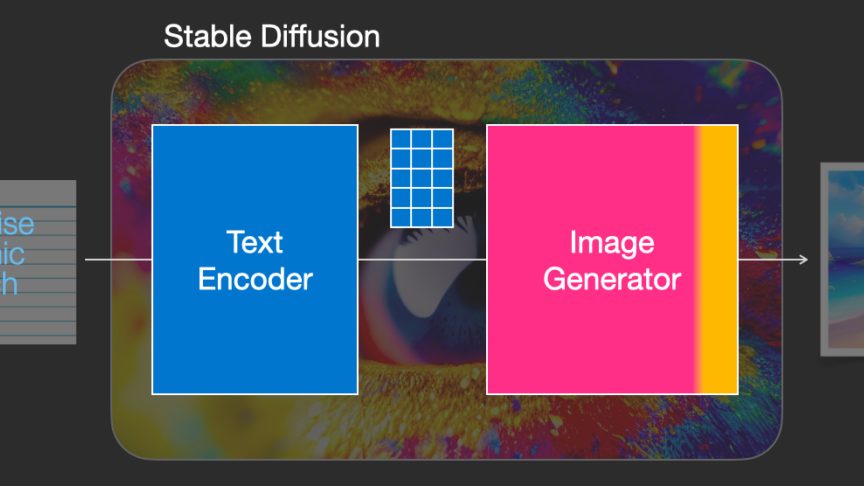

The second aspect is that the hardware has become better designed for computer vision tasks. My next post will focus in more detail on AI and deep learning but here’s a quick taster. The Graphics Processing Unit (GPU) is a chip originally designed for the gaming industry. It turns out also to be very useful for the kind of massively parallel number-crunching that neural networks rely on. GPUs have unlocked the potential of neural networks as an approach to computer vision (and in fact other tough AI problems).

2. More data to feed into the system. In the same cat example, Google fed the system 10 million images taken from YouTube videos. The quantity of available data has led to qualitative improvements in computer vision algorithms.

The story of computer vision is in many ways a story about artificial neural networks. In extremely rudimentary form, they underlay early progress. Powered by GPUs and tons of data, deep neural networks are driving progress today. As progress has accelerated, so has investor excitement: the computer vision market is now estimated to reach $50 bn by 2022.

Hopefully this look back has whetted your appetite. AI, machine learning and deep neural networks to follow in the next post!